Rapid web app development with Devin – A Developer’s Perspective

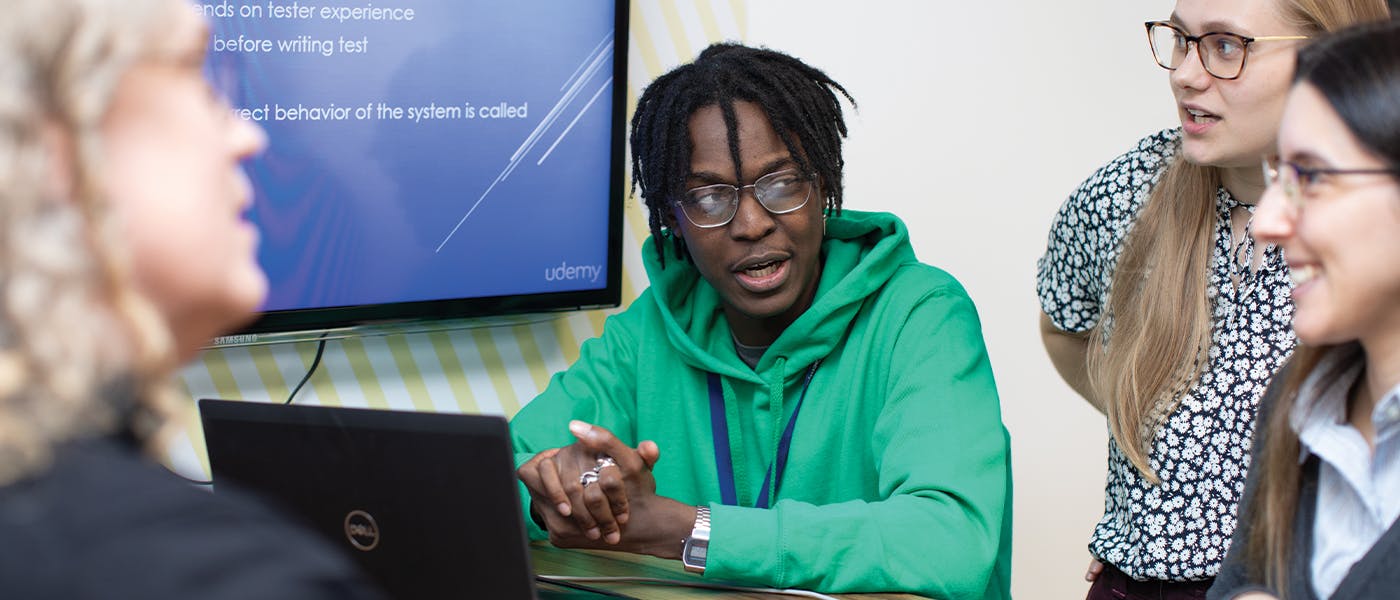

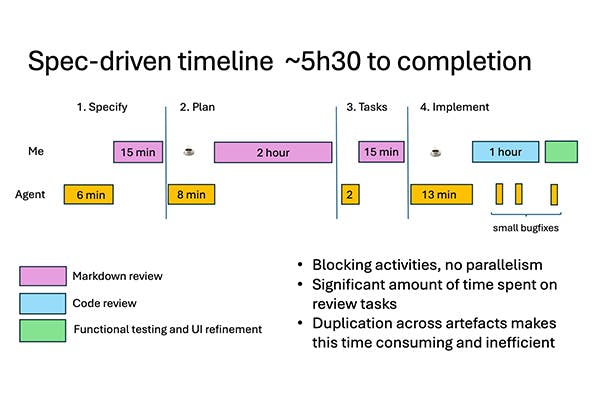

James Camilleri spent some time experimenting with AI tools for code generation, more specifically with agentic AI. In this post, he shares insights from rebuilding a carbon emissions calculator with Devin and with Copilot, looking at where agentic AI speeds up delivery and where human engineering remains essential.

James Camilleri